
Article Information


Authors:

Nomasonto B.D. Magobe1

Sonya Beukes1

Ann Müller1

Affiliations:

1Department of Nursing, University of Johannesburg, South Africa

Correspondence to:

Nomasonto Magobe

Email:

nmagobe@uj.ac.za

Postal address:

PO Box 524, Auckland Park 2006, South Africa

Dates:

Received: 03 Dec. 2009

Accepted: 13 Dec. 2010

Published: 22 July 2011

How to cite this article:

Magobe, N.B.D., Beukes, S. & Müller, A., 2011, ‘Scoring clinical competencies of learners: A quantitative descriptive study’,

Health SA Gesondheid 16(1), Art. #524, 7 pages.

doi:10.4102/hsag.v16i1.524

Copyright Notice:

© 2011. The Authors. Licensee: AOSIS OpenJournals. This work is licensed under the Creative Commons Attribution License.

ISSN: 1025-9848 (print)

ISSN: 2071-9736 (online)


Scoring clinical competencies of learners: A quantitative descriptive study


In This Original Research...


Open Access


• Abstract

• Opsomming

• Introduction

• Problem statement

• Background and rationale

• Aim and objectives of the study

• Definition of key concepts

• Clinical competence

• Learners

• Clinical instructor and assessor

• Correlation of marks

• Research method and design

• Design

• Materials

• Sampling method, criteria and size: Learners

• Sampling method, criteria and size: Clinical assessors

• Data collection methods

• Data collection for learner profile (demographic profile data)

• Data collection of clinical assessors’ scoring of learners

• Observed clinical competencies during summative clinical assessments

• Data analysis

• Learner profile (demographic data)

• Clinical assessors’ scoring

• Ethical considerations

• Validity and reliability

• Validity

• Face validity

• Content validity

• Inferential validity

• Reliability

• Inter-rater reliability

• Observational reliability

• Results and discussion

• Learner profiles (demographic data)

• Gender distribution of learner respondents

• Age distribution of learner respondents

• Department in which learners worked during study

• Ability to practise clinical skills at the workplace during study

• Access to preceptor at the workplace during study

• Frequency of access to preceptor

• Type of accessible preceptor

• Clinical assessors’ scoring of learners during summative clinical assessment

• Limitations of the study

• Recommendations

• Conclusion

• Authors’ contributions

• References


Abstract

This article reports the correlation between different clinical assessors’ scoring of learners’ clinical competencies in order

to exclude any possible extraneous variables with regard to reasons for poor clinical competencies of learners. A university in Gauteng, South

Africa provides a learning programme that equips learners with clinical knowledge, skills and values in the assessment, diagnosis, treatment and

care of patients presenting at primary health care (PHC) facilities. The researcher observed that, despite additional clinical teaching and

guidance, learners still obtained low scores in clinical assessments at completion of the programme. This study sought to determine possible reason(s)

for this observation. The objectives were to explore and describe the demographic profile of learners and the correlation between different clinical

assessors’ scoring of learners. A purposive convenience sample consisted of learners (n = 34) and clinical assessors (n = 6). Data

were collected from learners using a self-administered questionnaire and analysed using a nominal and ordinal scale measurement. Data from clinical

assessors were collected using a checklist, which was statistically analysed using a software package. The variables were correlated to determine the

nature of the relationship between the different clinical assessors’ scores on the checklist to ensure inter-rater reliability. Findings showed

that, after correlation, the mean marks allocated by the clinical assessors did not differ significantly at the 0.05 level of significance. Thus,

scoring of marks did not contribute to poor clinical competencies exhibited by learners.

Opsomming

This article describes the investigation of the correlation between different clinical assessors’ allocation of marks during the assessment of learners’ clinical skills in order to exclude any possible extraneous variables with regard to reasons for poor performance. A university in Gauteng, South Africa offers a learning programme that equips learners with clinical knowledge, skills and values in the assessment, diagnosis, treatment and care of patients in primary health care (PHC) facilities. The researcher observed that, despite additional clinical teaching and guidance, learners still obtained low marks in clinical evaluations towards the end of the programme. The study investigated the possible reason(s) for this observation. The aim of the study was to explore and describe the demographic profile of learners, as well as the correlation of the marks allocated by different clinical assessors. A purposive convenience sample consisted of learners (n = 34) and clinical assessors (n = 6). Data were collected by having learners individually complete a questionnaire. These data were analysed using nominal and ordinal scales. Clinical assessors completed a checklist and the data were analysed using a statistical software package. The variables were compared to determine the nature of the relationship between the different clinical assessors’ scores on the checklist. This was done to ensure inter-rater reliability. Results showed that, after correlation, the mean marks allocated by different clinical assessors did not differ significantly at the 0.05 level of significance. The allocation of marks therefore did not contribute to the learners’ poor clinical skills.

Introduction

Problem statement

Background and rationale

Nursing education institutions face the challenge of producing learner nurses who are clinically both competent and prepared for practice in the primary

health care (PHC) setting (Edwards et al. 2004). The South African National Department of Health (DoH) emphasises the competencies of all health

workers as stated in the Comprehensive PHC Service Package for South Africa. It states that ‘no member of staff [including primary

clinical nurses] should undertake [clinical] tasks unless they are competent to do so’ (DoH 2001a:7).

The South African Health Sector Strategic Framework of 1999–2004 reiterates the importance of clinical competencies by stating that

‘appropriately trained PHC nurses must be available in all public PHC facilities’ (DoH 1999:17). The South African university where the

study was conducted provides a learning programme at a post-basic level, namely ‘PHC: Clinical nursing, diagnosis, treatment and care’.

The programme equips learners with clinical knowledge, skills, attitudes and values in patient assessment, diagnosis, treatment and care and incorporates

clinical competencies of prescribing and issuing essential drugs included in the PHC essential drug list (schedule 1–4 drugs only) (DoH 1996:17;

SANC 1984:R2418).

The learning programme is regulated by the South African Nursing Council (SANC), a statutory body entrusted to set and maintain standards of nursing

education and practice as stated in the Nursing Act of 1978 (as amended; South Africa 1978). The SANC standards include the implied appropriate

rating of learners in measuring clinical competencies. The teaching and learning strategies of the programme include clinical supervision, guidance and

assessment by lecturers and clinical instructors who have personal clinical experience to enhance successful outcomes and ensure internalisation of the

clinical content and skill (Bezuidenhout 2003:19). On completion of the programme, learners should be able to ‘function independently [as clinical

nurse practitioners], with more limited referral to [a] medical practitioner’ (Beukes 1983:6; DoH 1996:18; DoH 2001b:23) in public PHC facilities.

The researcher (as the lecturer, clinical instructor and facilitator of the learning programme) observed that learners obtained low scores in clinical

competencies for two successive academic years based on the minimum standard and critical programme outcomes of the clinical assessment tool, despite

the implementation of prescribed and additional clinical guidance and learning opportunities. Such low scores reflect the non-attainment of clinical

competencies, which negatively impacts on the quality of clinical PHC (DoH 1996:18; Mofukeng 1998:63).

Despite the implementation of prescribed and additional clinical guidance and learning opportunities, it was evident that learners continued to display

poor clinical competencies and obtained low scores during assessments. This raised the question of (1) what the reasons for low clinical competency scores

are and, specifically, (2) whether the low scores could be due to external variables within the learners’ demographic profile or the erroneous scoring

by different clinical assessors.

Aim and objectives of the study

The purpose of the research was to exclude any possible extraneous variables in the learner profile and clinical assessors’ scoring of

learners’ clinical competencies in the investigation of reasons for their poor results (Burns & Grove 2001:243; Mouton & Marais

1990:50). The study aimed (1) to explore and describe the demographic profile of learners registered for the programme in the 2003 academic year

and (2) to explore and describe the correlation of different clinical assessors’ scoring during summative clinical assessments of learners.

Definition of key concepts

Clinical competence

Clinical competencies in this study involve applied competence, which implies the integration of theory and practice (South African Qualifications

Authority 2000:16). In the context of the learning programme under study, the applied competencies refer to the further development of the learner’s

intellectual, practical and reflective competencies (knowledge, skills, attitudes and values), clinical assessment, diagnosis, treatment and care in PHC to

promote the health of the individual, group and community (Muller 1999, cited in Morolong 2002:8; RAU 2002:5).

Learners

Learners refer to professional (registered) nurses who are students and consumers of the specific learning programme. Learner participants refer to

students who were registered for the learning programme in the 2003 academic year (RAU 2002:5).

Clinical instructor and assessor

The clinical instructor is the qualified primary clinical nurse who acts as a clinical guidance provider and teacher to ensure that clinical learning

takes place (Morton-Cooper & Palmer 2000:197). The primary function of the clinical instructor and assessor in the context of this study is to facilitate

clinical teaching. It includes the assessment of clinical learning and the correct and relevant application of the learnt clinical skills (clinical competencies).

Correlation of marks

In the context of this study the correlation of learners’ scored marks refers to the systematic investigation of relationships between the

scores allocated by clinical assessors (Burns & Grove 2001:30; De Vos & Fouché 2001:226; Mouton & Marais 1990:44; Polit &

Beck 2004:467) with regard to reasons for poor clinical competencies observed during summative clinical assessment of learners registered for the

programme in the 2003 academic year.


Research method and design


Design

The study employed a quantitative, exploratory, descriptive and contextual research design (Burns & Grove 2001:61; Mouton 1996:103). Exploratory

and descriptive design methods refer to highly structured, in-depth statistical analysis of the profile (demographic data) of learners and the correlation

between clinical assessors’ summative clinical assessment scores of learners (Babbie & Mouton 2001:49; Polit & Hungler 1995:15).

The contextual nature of the study refers to its intrinsic interest within the immediate narrower context of a learning programme provided by a

specific university in Gauteng, South Africa. It therefore does not seek to generalise the findings to a larger population (Mouton & Marais 1990:49).

Adami and Kiger (2005) maintain that it is important to research education within specific contexts rather than seeking to extrapolate research evidence

and recommendations for nurse education from one context to another.

Materials

In quantitative research, a relationship is a connection or association between two or more variables that can be described through correlation

procedures (Polit & Beck 2004:467, 730). Correlation of scores was used to determine the nature of the relationship and to ensure inter-rater

reliability between scores awarded to learners by clinical assessors (three paired samples) during summative clinical assessments of learners

registered for the programme in the 2003 academic year (Burns & Grove 2001:30; Polit & Beck 2004:467).
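The correlation of two assessors’ scores for the same learners can be illustrated with a short sketch. The article does not name the correlation coefficient used, so Pearson’s r is assumed here purely for illustration, and the scores below are hypothetical values, not the study’s data.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares of the deviations from the means
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one assessor gives constant scores")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical percentage scores awarded to five learners by one assessor pair
assessor_a = [48, 55, 62, 40, 51]
assessor_b = [50, 53, 60, 43, 49]

r = pearson_r(assessor_a, assessor_b)
print(f"r = {r:.3f}")
```

A coefficient close to +1 would suggest that the two assessors rank and score learners consistently, which is the sense of inter-rater reliability used in this study.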

Sampling method, criteria and size: Learners

A purposive convenience sampling method was used by selecting only consenting registered learners for compiling the demographic profile of

learners (Polit & Hungler 1995:638). The inclusion criteria determined that learners had to be academically active (i.e. attendance at

clinical supervision and guidance sessions during the 2003 academic year, as required by the learning programme guidelines (RAU 2002)) and

present at the summative clinical competencies assessment at the end of the academic year.

Sampling method, criteria and size: Clinical assessors

A purposive convenience sample of all consenting clinical assessors (n = 6) who were also willing to be involved in clinical assessment of

learners during the summative clinical competencies assessments was used (Polit & Hungler 1995:638).

Data collection methods

Data collection for learner profile (demographic profile data)

In view of learners’ literacy level and writing skills, the instrument used was a self-administered questionnaire (De Vos 2001:155). The

instrument contained variables which described various characteristics of learners that could significantly influence their clinical competencies

(Burns & Grove 2001:182), for example gender, age, access to preceptor, type of facility the learner is working in, et cetera. To avoid a poor

response rate, the instrument was developed to be brief and easy to understand and complete by participating learners, and also easy to score and

interpret by the researcher, as advocated by De Vos (2001:82).

Data collection of clinical assessors’ scoring of learners

An existing generic departmental summative clinical competencies assessment instrument in the form of a checklist (copyright reserved by University

of Johannesburg) was used to collect data during clinical summative assessments of participating learners. The checklist was administered by clinical

assessors, who employed structured observation during summative clinical assessments. Summative clinical assessment of learners is a structured

event for learners and clinical assessors, for which dates and times are set in advance (Polit & Hungler 1995:308). To familiarise learners with

the procedure of the clinical assessment, the procedure was explained and rehearsed during the last ‘ongoing’ clinical assessment.

The clinical assessment instrument has an ordinal scale consisting of exclusive and exhaustive categories with unequal intervals signifying an incremental

ability of the learner to demonstrate clinical competencies (Burns & Grove 2001:394–395; Polit & Beck 2004:452). The ordinal measure items,

as reflected in Table 1, inform a final score for the learner, which is recorded at an interval level of measurement.

Data were collected using ordinal rating scales that were quantified during data analysis (Polit & Hungler 1995:310). A score was awarded to the

learner by the clinical assessor during structured observations of clinical competencies of learners (Burns & Grove 2001:419). Each learner was

assessed by three pairs of clinical assessors (three paired samples). The clinical assessors were advised to function independently and not to communicate

during the structured observations. They were also advised not to collaborate or discuss their individual scoring of learners’ clinical competencies

(Burns & Grove 2001:801; Polit & Beck 2004:721) so as to avoid bias and ensure reliability of scoring by the individual clinical assessor and

validity of the clinical assessment instrument.


TABLE 1: Summative clinical assessment instrument scale.


Observed clinical competencies during summative clinical assessments

The clinical competencies that were specifically observed and scored are reflected in the summative clinical competencies assessment instrument

described earlier. The clinical assessment instrument was used to measure the inter-rater reliability (the degree of consistency of marks allocated

by clinical assessors) during summative assessments.

Data analysis

Learner profile (demographic data)

Learner profiles (demographic data) were analysed using a nominal and ordinal scale measurement. Nominal scale measurement data (of the self-administered

instrument) were organised into exhaustive categories that could not be compared or ordered. Each category was given a number which served as a label only

and could not be used for mathematical calculations (Burns & Grove 2001:393; Fouché 2001:167–168; Parahoo 1997:339–340).

Ordinal scale measurement refers to the allocation of numbers to a variable (as in nominal measurement), which allows sorting of the responses on the basis

of their standing relative to each other. No equal distance is implied between the different responses; the allocated numbers imply only a category of the

response within the variable being measured (Parahoo 1997:340–341; Polit & Hungler 1995:372).
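The distinction between the two measurement levels can be sketched in code. The category codes, labels and responses below are hypothetical and serve only to illustrate which summaries are meaningful at each level: counts, percentages and the mode for nominal data, and additionally the median for ordinal data.

```python
from collections import Counter
import statistics

# Hypothetical coded questionnaire responses (illustrative only, not the study's data).
# Nominal variable: the numbers are labels and carry no order.
gender_labels = {1: "female", 2: "male"}
gender_codes = [1, 1, 2, 1, 1, 2, 1]

# Ordinal variable: the numbers rank the categories, but the gaps between them are not equal.
access_labels = {1: "never", 2: "once a week or less", 3: "more than once a week"}
access_codes = [2, 3, 2, 1, 3, 2, 2]


def frequency_table(codes, labels):
    """Return {label: (count, percentage)} for every category in `labels`."""
    counts = Counter(codes)
    total = len(codes)
    return {
        labels[code]: (counts[code], round(100 * counts[code] / total, 1))
        for code in sorted(labels)
    }


gender_table = frequency_table(gender_codes, gender_labels)

# The mode is a valid summary for nominal data.
modal_gender = gender_labels[statistics.mode(gender_codes)]

# The median is valid for ordinal data; median_low always returns an observed category.
median_access = access_labels[statistics.median_low(access_codes)]

print(gender_table, modal_gender, median_access)
```

Means and standard deviations are deliberately not computed here, because neither measurement level implies equal distances between the codes.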

Clinical assessors’ scoring

Data were statistically analysed by correlating variables to determine the nature of the relationships between the individual assessors’ scoring

as reflected on the checklist (Burns & Grove 2001:30; Polit & Beck 2004:467). Data analysis involved systematic investigation of relationships

between the scoring variables of clinical assessors (Burns & Grove 2001:30; De Vos & Fouché 2001:226; Mouton & Marais 1990:44; Polit &

Beck 2004:467). Correlating the scores was meant to determine the nature of the relationships and thus ensure inter-rater reliability (the degree of consistency

of marks) with regard to reasons for poor clinical competency scores of learners (Burns & Grove 2001:30).

A basic parametric procedure for testing differences between group means was used (Polit & Hungler 1995:411). The researcher sought to obtain two

measures from the same subjects by allocating two clinical assessors per learner. Thus, a paired t-test was used to evaluate the mean allocated

scores per pair of clinical assessors for the same group of learners (Polit & Hungler 1995:411).
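The paired t-test described above can be sketched as follows. The scores are hypothetical and the function name `paired_t` is introduced here for illustration only. The sketch computes the test statistic from the per-learner differences and compares it with the two-tailed critical value from a standard t table. A statistical package (for example `scipy.stats.ttest_rel` in Python) would report the exact p-value instead.

```python
import math
import statistics


def paired_t(scores_a, scores_b):
    """Return (t statistic, degrees of freedom) for a paired t-test."""
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    # Each learner contributes one difference between the two assessors' scores.
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd_diff == 0:
        raise ValueError("t is undefined when all differences are identical")
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Hypothetical percentage scores for five learners from one assessor pair
assessor_a = [48, 55, 62, 40, 51]
assessor_b = [50, 53, 60, 43, 49]

t_stat, df = paired_t(assessor_a, assessor_b)

# Two-tailed critical value for alpha = 0.05 and df = 4, taken from a standard t table
T_CRITICAL_DF4 = 2.776
significant = abs(t_stat) > T_CRITICAL_DF4

print(f"t = {t_stat:.3f}, df = {df}, significant at 0.05: {significant}")
```

When |t| stays below the critical value, the mean difference between the two assessors is not statistically significant at the 0.05 level, which is the pattern the study reports for its assessor pairs.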

Ethical considerations

The following ethical principles, as prescribed by the former Rand Afrikaans University standards (RAU 2002), were adhered to during the study:

• right to privacy, confidentiality and anonymity

• right to equality, justice, human dignity and protection against harm

• right to freedom of choice, expression and access to information

• obligation to adhere to the practice of science.

Validity and reliability

In quantitative research methods, the fundamental characteristics of a measuring instrument are its validity and reliability

(Hudson 1981, cited in De Vos 2001:82).

Validity

A valid instrument is described as one that measures the concept it is supposed to measure and yields accurate results (De Vos 2001:83).

The concept measured in this study is the demographic data of the learner and the degree of consistency of scores by clinical assessors

(inter-rater reliability) with regard to reasons for poor clinical competencies of learners. Strategies used to ensure measurement validity

are described in the following sections.

Face validity

Face validity verifies the fact that the instrument appears to measure relevant content (Burns & Grove 2001:798). The respondents (learners)

were willing to complete the self-administered questionnaire and the clinical assessors were able to use the clinical assessment checklist, because

the respective instruments both had face validity (De Vos 2001:84; Lynn 1986 and Thomas 1992, cited in Burns & Grove 2001:400).

Content validity

Content validity refers to the extent to which the questions in the questionnaire represent the phenomenon being studied (Parahoo 1997:270).

Several measures ensured content validity of the instruments:

• The self-administered demographic data questionnaire was piloted with two learners of a different programme and refined several times until

approved by the two study supervisors.

• Prior to undertaking the study, the summative clinical assessment instrument, together with its comprehensive manual, was tested and validated for

two successive years (2001 and 2002) by a subject expert and lecturer (who was also the researcher). During this time the instrument was modified and

finally accepted as a valid clinical assessment instrument for the learning programme under study.

• The same clinical assessment instrument was verified and validated by two senior faculty members, who are also experienced researchers

(Burns & Grove 2001:400).

Inferential validity

Inferential validity refers to the validity of the logical inferences made during the study (Mouton & Marais 1990:106–107). Inferential

validity was ensured throughout the study and the conclusions were inferred from the findings of the study.

Reliability

Reliability refers to the degree of accuracy (consistency, stability and repeatability) with which an instrument measures the studied attribute

(Mouton 1996:132; Uys & Basson 1995:75). A reliable instrument should provide values with a minimum amount of random error (Burns & Grove

2001:396; Polit & Hungler 1995:347). Measures applied to ensure reliability in this study are described in the following sections.

Inter-rater reliability

Inter-rater reliability refers to the degree of consistency between two assessors (Burns & Grove 2001:801; Polit & Beck 2004:721).

In this study inter-rater reliability was ensured by systematic exploration and description of the relationship between scores by the assessor

pair (three paired samples) who observed clinical competencies of a learner during summative clinical assessments. This method ensured

inter-rater reliability by correlating statistically significant relationships between the three paired samples of clinical assessors

(Burns & Grove 2001:30; Polit & Beck 2004:467).

Observational reliability

To ensure observational consistency, each learner was observed systematically and repeatedly by three pairs of clinical assessors

(six assessors in total). This, in turn, improved observational reliability (Denzin & Lincoln 1994:381). According to Kidder (1981),

as cited in Denzin and Lincoln (1994:381), this method is similar to a test–re-test comparison in an experimental research strategy.

Results and discussion

Learner profiles (demographic data)

The data from the self-administered questionnaires (n = 34) were analysed using a statistical software package, namely the Statistical

Package for Social Science (SPSS). The findings are presented and discussed below. Further discussion of learners’ demographic profiles is

presented elsewhere (see Magobe 2005).


TABLE 2: Ability to practise clinical skills at the workplace for duration of programme (n = 34).


TABLE 3: Access to preceptor at the workplace for duration of programme (n = 34).


TABLE 4: Frequency of learners’ access to preceptors (n = 33).


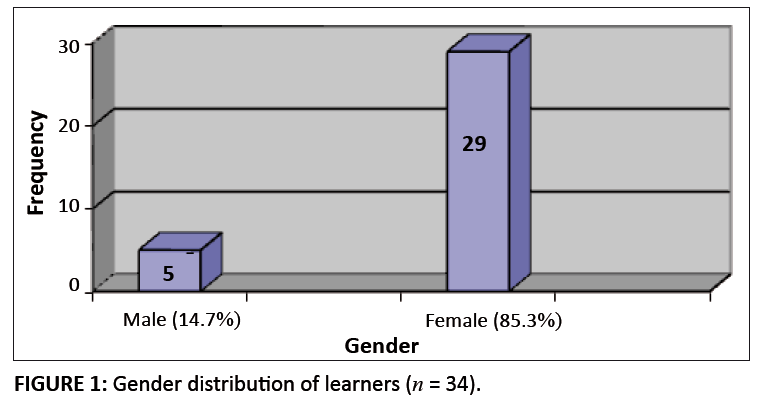

Gender distribution of learner respondents

There were more female than male learners. The SANC 2004 statistics reflect gender distribution of registered nurses as 93 012 females to 5478 males,

confirming female dominance in the nursing profession. The gender of participants did not contribute significantly to learners’ poor clinical competency scores.

Age distribution of learner respondents

Most respondents (n = 31, 94%) were between the ages of 30 and 59 years, whilst only 6% (n = 2) were between the ages of

20 and 29 years. Adult learners already have a wealth of clinical experience that they bring to the learning environment and this could be a hindrance

on its own – unlearning the ‘old way of doing things’ and learning new clinical skills. Such learners also undertake the programme

simply to gain greater fulfilment from their profession (Darkenwald & Merriam 1982:9; Knowles 1984:10) and to improve their clinical competencies

with regard to clinical PHC practice. Age distribution did not contribute significantly to learners’ poor clinical competency

scores.

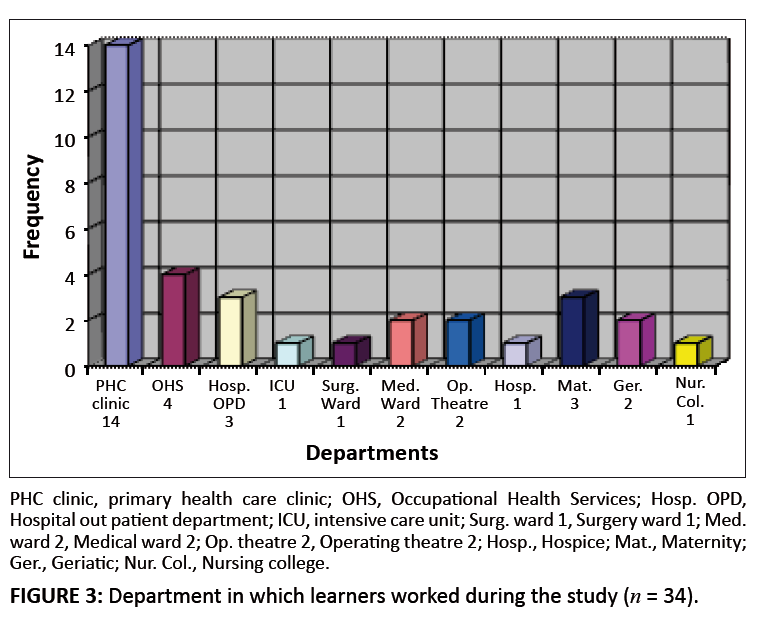

Department in which learners worked during study

The majority of learners (n = 21, 61.8%) worked in departments ideally suited to application of newly learnt PHC clinical skills, such as

PHC clinics (n = 14), occupational health services (n = 4) and hospital outpatient departments (n = 3). The qualitative aspect

of the main study (see Magobe, Beukes & Müller 2010) revealed that shortage of staff was, however, the major obstacle to practising the

appropriate clinical skills and thus contributed to poor clinical competency scores.

The rest of the learners (n = 13, 38.2%) worked in a non-PHC clinical practice environment and thus lacked clinical practice opportunities,

resulting in poor clinical competency scores.
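The percentages reported for the departments follow directly from the counts given above, as this short check shows. The counts are taken from the text; the dictionary keys and the helper `pct` are naming choices made for this sketch.

```python
# Counts reported in the text for the department in which learners worked (n = 34)
department_counts = {
    "PHC clinics": 14,
    "occupational health services": 4,
    "hospital outpatient departments": 3,
    "non-PHC clinical practice environment": 13,
}


def pct(count, total):
    """Percentage of `total`, rounded to one decimal place."""
    return round(100 * count / total, 1)


total = sum(department_counts.values())
non_phc = department_counts["non-PHC clinical practice environment"]
phc_suited = total - non_phc

print(f"PHC-suited departments: n = {phc_suited}, {pct(phc_suited, total)}%")
print(f"Non-PHC environment:    n = {non_phc}, {pct(non_phc, total)}%")
```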

Ability to practise clinical skills at the workplace during study

The majority of learners (n = 26, 76.5%) were able to practise their learnt clinical PHC skills at their workplace, which is supposed to improve

their clinical competency scores. However, because of factors such as a shortage of staff (see Magobe, Beukes & Müller 2010), more than half of the

learners (54.6%) were able to access a preceptor only once a week or less (Table 4), thus resulting in poor clinical competency scores.

Access to preceptor at the workplace during study

Findings indicate that 67.6% of learners (n = 23) were able to access a preceptor at their workplace for the duration of the programme.

However, actual preceptorship of learners could not take place owing to factors such as shortage of staff as depicted in the qualitative findings

of this study (see Magobe, Beukes & Müller 2010). Furthermore, more than half of the learner group (54.6%) could access the preceptor only

once a week or less, as shown in Table 4. This relates to a point made in the previous paragraph regarding poor clinical competency scores.

Frequency of access to preceptor

Fewer than half of the learners (n = 15, 45.4%) were able to access a preceptor more than once a week (> 4 times/month). This is a clear

indication that more than half of the learners (54.6%) lacked clinical practice owing to their inability to access a preceptor as frequently as required,

which contributed to poor clinical competency scores.

Type of accessible preceptor

Almost a quarter (n = 8, 23.5%) of learners had preceptors who had a PHC clinical skills qualification. Two learners’ (5.9%)

preceptors did not have PHC clinical skills training and thus these preceptors did not have appropriate primary clinical nurse practitioner

qualifications to be preceptors for learners.

Andrews and Chilton (2000:555–556) explain that the clinical experience of preceptors without appropriate qualifications does not fully

compensate for the lack of the clinical and theoretical knowledge of the learner and therefore cannot adequately develop the clinical competencies of students.

Clinical assessors’ scoring of learners during summative clinical assessment

The data in Table 5 show that there was no significant difference between the mean scores allocated by the clinical assessors at the 0.05 level of significance.

The poor clinical competencies of learners thus appear not to be due to variable scoring by clinical assessors.


FIGURE 1: Gender distribution of learners (n = 34).


FIGURE 2: Age distribution of learners (n = 33).


FIGURE 3: Department in which learners worked during the study (n = 34).


FIGURE 4: Qualifications of accessible preceptors (n = 23).


Limitations of the study

A limitation of the study was that only clinical instructors who were also clinical assessors participated. Preceptors who work as

primary clinical nurses in PHC clinical practice, and so also contribute to the clinical development of the learners, but who were not fully accessible

to the learners, did not participate in the study.

Recommendations

It is recommended that the university and the PHC clinical practice should form formal partnerships in order to benefit from each other’s

strengths and so develop clinical competencies of primary clinical nurses. Such partnerships will improve clinical competencies of primary clinical

nurses while they are learners. Once qualified, they can, in turn, become preceptors and so improve clinical competencies of learners, which could

improve the general quality of care offered in PHC facilities (Haas et al. 2002:518).

According to Chalmers, Swallow and Miller (2001:604), such formal collaboration between the university and PHC clinical practice should include:

• common understanding of the purpose and proposed outcome

• articulation of clearly defined roles and responsibilities

• mutual trust with a common agenda

• shared values and beliefs

• appropriate management

• strategic planning.

Conclusion

Findings show that variables in the students’ demographic profile did not contribute significantly to their poor clinical competencies.

There was no significant difference between different assessors’ scoring at the 0.05 level of significance. The poor clinical competencies

of learners therefore could not be attributed to poor scoring by clinical assessors. Findings point to a lack of practice opportunities and in

some cases inadequate guidance by preceptors as reasons for learners’ poor clinical competencies.

Authors’ contributions

N.B.D.M. was the project leader and wrote the manuscript (article); S.B. and A.M. were responsible for supervision and co-supervision of the project respectively.

References

Adami, M.F. & Kiger, A., 2005, ‘A study of continuing nurse education in Malta: The importance of national context’,

Nurse Education Today 25(1), 78–84. doi:10.1016/j.nedt.2004.08.004,

PMid:15607250

Andrews, M. & Chilton, F., 2000, ‘Student and mentor perceptions of mentoring effectiveness’, Nurse Education Today 20, 555–562.

doi:10.1054/nedt.2000.0464,

PMid:12173259

Babbie, E. & Mouton, J., 2001, The practice of social research, Oxford University Press, Cape Town.

Beukes, K., 1983, ‘Why PHC? And some pertinent problems associated with it’, paper presented at an exploratory meeting,

Kalafong Hospital, Pretoria.

Bezuidenhout, M.C., 2003, ‘Guidelines for enhancing clinical supervision’, Health SA Gesondheid 8(8), 12–23.

Burns, N. & Grove, S.K., 2001, The practice of nursing research: conduct, critique and utilization. W.B. Saunders, Philadelphia.

Chalmers, H., Swallow, V.M. & Miller, J., 2001, ‘Accredited work-based learning: an approach for collaboration between higher education

and practice’, Nurse Education Today 21, 597–606.

doi:10.1054/nedt.2001.0666,

PMid:11884172

Darkenwald, G.G. & Merriam, S.B., 1982, Adult education: foundations of practice, Harper & Row, New York.

De Vos, A.S. & Fouché, C.B., 2001, ‘Data analysis and interpretation: Bivariate analysis’, in A.S. de Vos (ed.),

Research at grass roots: a primer for the caring professions, pp. 224–235, Van Schaik, Pretoria.

De Vos, A.S., 2001, Research at grass roots: a primer for the caring professions, Van Schaik, Pretoria.

Denzin, N.K. & Lincoln, Y.S., 1994, Handbook of qualitative research, Sage, Thousand Oaks, CA.

Department of Health, 1996, Restructuring the national health system for universal primary health care, Department of Health, Pretoria.

Department of Health, 1999, Health Sector Strategic Framework 1999-2004, Department of Health, Pretoria.

Department of Health, 2001a, The primary health care package for South Africa – a set of norms and standards, Department of Health, Pretoria.

Department of Health, 2001b, A comprehensive primary health care service package for South Africa, Department of Health, Pretoria.

Edwards, H., Smith, S., Courtney, M., Finlayson, K. & Chapman, H., 2004, ‘The impact of clinical placement location on nursing students’

competence and preparedness for practice’, Nurse Education Today 24(4), 248–255.

doi:10.1016/j.nedt.2004.01.003,

PMid:15110433

Fouché, C.B., 2001, ‘Data collection methods’, in A.S. de Vos (ed.), Research at grass roots: a primer for the caring

professions, pp. 152–177, Van Schaik, Pretoria.

Haas, B.K., Deardorff, K.U., Klotz, L., Baker, B., Coleman, J. & De Witt, A., 2002, ‘Creating collaborative partnership between academia

and service’, Journal of Nursing Education 41(12), 518–522.

PMid:12530563

Knowles, M.S., 1984, Andragogy in action: applying modern principles of adult learning, Jossey-Bass, San Francisco.

Magobe, N.B.D., 2005, ‘Guidelines to improve clinical competencies of learners of the programme PHC: clinical nursing, diagnosis,

treatment and care’, MCur dissertation, Department of Nursing, University of Johannesburg.

Magobe, N.B.D., Beukes, S. & Müller, A., 2010, ‘Reasons for students’ poor clinical competencies in the Primary Health Care:

Clinical nursing, diagnosis treatment and care programme’, Health SA Gesondheid 15(1), Art. #525, 6 pages.

doi:10.4102/hsag.v15i1.525

Mofukeng, D.B., 1998, ‘Acceptability of clinical community nursing skills in mobile health services’, MCur dissertation,

Department of Nursing, Rand Afrikaans University.

Morolong, B.G., 2002, ‘Competency of the newly qualified registered nurse from a nursing college’, MCur dissertation, Department

of Nursing, Rand Afrikaans University.

Morton-Cooper, A. & Palmer, A., 2000, Mentoring, preceptorship and clinical supervision: a guide to professional support roles in clinical

practice, 2nd edn., Blackwell Science, Oxford.

Mouton, J., 1996, Understanding social research, Van Schaik, Pretoria.

Mouton, J. & Marais, H., 1990, Basic concepts in the methodology of the social sciences, Human Sciences Research Council, Pretoria.

Parahoo, K., 1997, Nursing research: principles, process and issues, Macmillan, Houndmills.

Polit, D.F. & Beck, C.T., 2004, Nursing research: principles and methods, 7th edn., Lippincott Williams & Wilkins, New York.

Polit, D.F. & Hungler, B.P., 1995, Nursing research: principles and methods, J.B. Lippincott, Philadelphia.

RAU. See Rand Afrikaans University.

Rand Afrikaans University, 2002, PHC: clinical nursing, diagnosis, treatment and care: Study guide, Department of Nursing, Johannesburg.

South Africa, 1978, Nursing Act, No. 50 of 1978 (as amended), Government Printer, Pretoria.

SANC. See South African Nursing Council.

South African Nursing Council, 1984, Regulations relating to the keeping, supply, administering or prescribing of medicines by registered nurses

(Government Notice R2418), viewed 03 April 2003, from

www.sanc.co.za/regulat/Reg-med.htm

South African Qualifications Authority, 2000, SAQA Bulletin, Government Printer, Pretoria.

Uys, H.H.M. & Basson, A.A., 1995, Research methodology in nursing. Kagiso Tertiary, Pretoria.
